Rust: a future for real-time and safety-critical software without C or C++

Tue 20 August 2019

Overview

Rust is a fairly new programming language that I'm really excited about. I gave a talk about it to my coworkers, primarily aimed at C++ programmers. This is that talk translated to a blog post. I hope you will be excited about Rust too by the end of this blog post!

A quick overview: Rust is syntactically similar to C++, but semantically very different. It's heavily influenced by functional programming languages like Haskell and OCaml. It's statically typed, with much more extensive type inference than C++ (you mainly need to write types out in function signatures). It's as fast as C and C++ while making guarantees about memory safety that are impossible to make in those languages.

house[-1]

Before we look at Rust in any more detail, I want you to imagine yourself in a scenario. Imagine that you are a builder setting up the gas supply in a new house. Your boss tells you to connect the gas pipe in the basement to the gas main on the pavement. You go downstairs, and find that there's a glitch: this house doesn't have a basement!

So, what do you do? Perhaps you do nothing, or perhaps you decide to whimsically interpret your instruction by attaching the gas main to some other nearby fixture, like the air conditioning intake for the office next door. Either way, suppose you report back to your boss that you're done.

KWABOOM! When the dust settles from the explosion, you would be guilty of criminal negligence.[1]

Yet this is exactly what happens in some programming languages. In C you could have an array, or in C++ a vector, ask for index -1, and anything could happen. The result could differ every time you run the program, and you might not realise that anything is wrong. This is called undefined behaviour. Its possibility can't be eliminated entirely, because low-level hardware operations are inherently unsafe, but many languages protect against it. C and C++ don't.
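For contrast, here's a minimal sketch of the same mistake in Rust, using a hypothetical `find_floor` helper. Indices have the unsigned type `usize`, so `house[-1]` is rejected at compile time, and an index past the end can't silently read neighbouring memory: `get` returns `None`, and plain `house[i]` panics with an index-out-of-bounds message.

```rust
/// Hypothetical helper: look up a floor of a house by index.
/// `get` returns `None` for a floor that doesn't exist, instead of reading
/// whatever memory happens to sit next to the slice.
fn find_floor(house: &[&'static str], index: usize) -> Option<&'static str> {
    // `house[index]` would also be safe: it panics on a bad index rather
    // than invoking undefined behaviour.
    house.get(index).copied()
}
```

A panic is still a bug, but it's a loud, immediate, reproducible one, not a gas main quietly attached to the wrong fixture.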

The lack of memory safety guarantees from these languages, and the ease with which undefined behaviour can be invoked, is terrifying when you think of how much of the world runs on software. Heartbleed, the famous SSL vulnerability, was due to this lack of memory safety; Stagefright, a famous Android vulnerability, was due to undefined behaviour from signed integer overflow in C++.

Vulnerabilities aren't the only concern. Memory safety is crucial to both the correctness and the reliability of a program. No one wants their program to crash out of nowhere, no matter how minor the application: reliability matters. As for correctness, I have a friend who used to work on rocket flight simulation software. They found that passing in the same initialisation data under a different filename gave a different result. Some uninitialised memory was being read; it happened to be the memory holding the program's arguments, so the simulation was seeded with garbage values derived from the filename. Arguably their entire business was a lie.

So why not use memory safe languages like Python or Java?

Languages like Python and Java use garbage collection to automatically protect us from bad memory accesses, like:

- use-after-frees (when you access memory that has been deallocated)
- double frees (when you release memory that's already been released, potentially corrupting the heap)
- memory leaks (when memory that isn't being used is never released; this isn't necessarily dangerous, but it can cause your system to crash and destroy performance)

A garbage collector runs as part of the JVM or the Python interpreter, and periodically checks memory to find unused objects, releasing their associated resources and memory.

But this comes at a real cost. Garbage collection adds run-time overhead, typically needs extra memory, and, crucially, means that at any point (you don't know when) the program may pause (for how long, you don't know) to clean up the garbage.

**Python and Java**

memory safe at the cost of speed and determinism

**C and C++**

fast and deterministic at the cost of memory safety

This lack of predictability rules Python and Java (at least in their standard implementations) out for hard real-time applications, where you must guarantee that operations will complete within a specified period of time. It's not about being as fast as possible; it's about guaranteeing that you will be fast enough every single time.

Of course, there are social reasons why C and C++ are popular: they're what people know, and they've been around a long time. But they are also popular because they are fast and deterministic. Unfortunately, this comes at the cost of memory safety. Even worse, many real-time applications are also safety-critical, like control software in cars and surgical robots. As a result, safety-critical applications often use these dangerous languages.

For a long time, this has been a fundamental trade-off. You either get speed and predictability, or you get memory safety.

Rust completely overturns this, which is what makes it so exciting and notable.

What this blog post will cover

These are the questions I hope to answer in this post:

- What are Rust's design goals?
- How does Rust achieve memory safety?
- What does polymorphism look like in Rust?
- What is Rust tooling like?

What are Rust's design goals?

- Concurrency without data races
  - Concurrency happens whenever different parts of your program might execute at different times or out of order.
  - We'll discuss data races more later, but they're a common hazard when writing concurrent programs, as many of you will know.
- Abstraction without overhead
  - This just means that the conveniences and expressive power the language provides don't come at a run-time cost: using them doesn't slow your program down.
- Memory safety without garbage collection
  - We've just talked about what these two terms mean. Let's have a look at how Rust achieves this previously contradictory pairing.

Memory safety without garbage collection

How Rust achieves memory safety is simultaneously really simple and really complex.

It's simple because all it involves is enforcing a few simple rules, which are really easy to understand.

In Rust, all objects have an owner, tracked by the compiler. There can only be one owner at a time, which is very different to how things work in most other programming languages. It ensures that there is exactly one binding to any given resource. This alone would be very restrictive, so of course we can also give out references according to strict rules. Taking a reference is often called "borrowing" in Rust and I'm going to use that language here.
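A minimal sketch of the one-owner rule, with hypothetical names: assigning a `String` to another variable, or passing it to a function, moves ownership, and the old binding can no longer be used. The commented-out line is one the compiler rejects.

```rust
/// Hypothetical helper: takes ownership of a String and returns its length.
/// The String is freed exactly once, when `pipe` goes out of scope here.
fn connect(pipe: String) -> usize {
    pipe.len()
}

fn ownership_demo() -> usize {
    let gas_main = String::from("gas main");
    let new_owner = gas_main; // ownership moves; `gas_main` is no longer usable
    // println!("{}", gas_main); // error[E0382]: borrow of moved value: `gas_main`
    connect(new_owner) // ownership moves again, into `connect`
}
```

Because only one binding ever owns the heap buffer, there is exactly one place where it gets freed, which is what rules out double frees without a garbage collector.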

The rules for borrowing are:

- Any borrow must last for a scope no greater than that of the owner.
- You may have one or the other of these two kinds of borrows, but not both at the same time: one or more immutable references to a resource, OR exactly one mutable reference.
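Both rules can be sketched in a few lines. The function and values are made up for illustration; each commented-out line is one the compiler rejects if uncommented, with the error rustc reports.

```rust
/// Hypothetical example of the two borrowing rules.
fn borrowing_demo() -> Vec<i32> {
    let mut readings = vec![1, 2, 3];

    // Rule 1: a borrow may not outlive its owner.
    // let dangling;
    // {
    //     let owner = vec![0];
    //     dangling = &owner; // error[E0597]: `owner` does not live long enough
    // }
    // println!("{:?}", dangling);

    // Rule 2, first half: any number of immutable borrows may coexist...
    let first = &readings[0];
    let last = &readings[2];
    // ...but not alongside a mutable one:
    // readings.push(4); // error[E0502]: cannot borrow `readings` as mutable
    //                   // because it is also borrowed as immutable
    let sum = first + last; // last use of `first` and `last`

    // Rule 2, second half: exactly one mutable borrow, once the immutable
    // borrows are no longer in use.
    let writer = &mut readings;
    // let peek = &readings[0]; // error[E0502]: cannot borrow `readings` as
    //                          // immutable because it is also borrowed as mutable
    writer.push(sum);
    writer.push(0);

    readings
}
```

Note that a borrow ends at its last use, not at the end of the block, which is why the mutable borrow of `readings` is allowed once `sum` has been computed.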

The first rule eliminates use-after-frees. The second rule eliminates data races. A data race happens when:

- two or more pointers access the same memory location at the same time,
- at least one of them is writing,
- and the operations aren't synchronised.

The memory is left in an unknown state.

We didn't have a heap when I worked as an embedded engineer, and we had a hardware trap for null pointer dereferences, so a lot of common memory safety issues weren't a major concern. Data races were the main type of bug I was really scared of. Races can be difficult to detect until you make a seemingly insignificant change to the code, or there's a slight change in the external conditions, and suddenly the winner of the race changes. A race condition in the Therac-25 radiation therapy machine caused multiple deaths when patients were given lethal doses of radiation during cancer treatment.

As I said, these rules are simple and shouldn't be surprising for anyone who's ever had to deal with the possibility of data races before – but when I said that they are also complex, I meant it is incredibly smart that Rust is able to enforce these rules at compile time. This is Rust's key innovation.
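Here's a sketch of how the rules play out across threads, using only the standard library's `Arc`, `Mutex` and `thread::spawn`; the function and its parameters are hypothetical. Sharing a plain mutable local with a spawned thread doesn't compile, while wrapping it in `Arc<Mutex<_>>` provides the synchronisation the rules demand.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical example: `n_threads` threads each increment a shared counter
/// `increments` times.
fn count_with_threads(n_threads: usize, increments: u64) -> u64 {
    // Sharing a plain local across threads is rejected at compile time:
    // let mut counter = 0u64;
    // thread::spawn(|| counter += 1); // error[E0373]: closure may outlive the
    //                                 // current function, but it borrows `counter`
    let counter = Arc::new(Mutex::new(0u64));
    let mut handles = Vec::new();

    for _ in 0..n_threads {
        // Each thread gets its own reference-counted handle to the same Mutex.
        let counter = Arc::clone(&counter);
        handles.push(thread::spawn(move || {
            for _ in 0..increments {
                // Locking is the only way to get mutable access, so writes
                // from different threads can't overlap.
                *counter.lock().unwrap() += 1;
            }
        }));
    }

    for handle in handles {
        handle.join().unwrap();
    }

    let total = *counter.lock().unwrap();
    total
}
```

The design choice here is that the compiler doesn't need to know anything about threads specifically: `thread::spawn` simply requires its closure to own everything it uses, and `Mutex` is the type that hands out the single mutable borrow.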

There are some memory-safety checks that have to happen at run time, like array bounds checking. But if you're writing idiomatic Rust you'll almost never need these, because you wouldn't usually be indexing an array directly. Instead, you'd be using higher-order functions like fold, map and filter, which you'll be familiar with if you've written Haskell, Scala or even Python.
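As an illustration, here are two hypothetical functions computing the same thing, the sum of the squares of the even numbers in a slice: one with indexing and one with iterator adapters. Whether the optimiser removes the bounds checks in the indexed version depends on the code; the iterator version has no indices to check in the first place.

```rust
/// Indexed loop: each `values[i]` is bounds-checked at run time, unless the
/// optimiser can prove the check is redundant.
fn sum_of_even_squares_indexed(values: &[i64]) -> i64 {
    let mut total = 0;
    for i in 0..values.len() {
        if values[i] % 2 == 0 {
            total += values[i] * values[i];
        }
    }
    total
}

/// Iterator version: no indices, so there is nothing to bounds-check.
fn sum_of_even_squares(values: &[i64]) -> i64 {
    values
        .iter()
        .filter(|&&v| v % 2 == 0)
        .map(|&v| v * v)
        .fold(0, |acc, square| acc + square)
}
```

This is also a small example of "abstraction without overhead": the iterator chain is the more expressive version, and it is not the slower one.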

unsafe Rust

I mentioned earlier that the possibility of undefined behaviour isn't something that can be eliminated entirely, due to the inherently unsafe nature of low-level operations. Rust allows you to perform such operations within specific unsafe blocks. I believe C# and Ada have similar constructs for disabling certain safety checks. You'll often need this in Rust when doing embedded or low-level systems programming. The ability to isolate the potentially unsafe parts of your code is incredibly useful: those parts will be subject to a higher level of scrutiny, and if you have a bug that looks like a memory problem, they are the only places that can be causing it, rather than absolutely anywhere in your code.

The unsafe block doesn't disable the borrow checker, which is the part of the compiler that enforces the borrowing rules we just talked about. It only unlocks a few extra operations, such as dereferencing raw pointers, accessing or modifying mutable static variables, and calling unsafe functions. The benefits of the ownership system are still there.
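A minimal sketch, using a hypothetical function: creating a raw pointer is ordinary safe code, and only dereferencing it needs `unsafe`. The commented-out lines show the borrow checker still rejecting two simultaneous mutable borrows inside the block.

```rust
/// Hypothetical example of what `unsafe` does and doesn't change.
fn read_through_raw_pointer() -> i32 {
    let mut value = 41;
    // Creating a raw pointer is safe; dereferencing it is not.
    let raw: *mut i32 = &mut value;
    unsafe {
        // We promise the compiler that `raw` points to a live, aligned i32.
        *raw += 1;

        // The borrow checker is still active in here:
        // let a = &mut value;
        // let b = &mut value; // error[E0499]: cannot borrow `value` as mutable
        //                     // more than once at a time
        // *a += *b;
    }
    value
}
```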

Revisiting ownership

Speaking of the ownership system, I want to compare it with ownership in C++.

Ownership semantics in C++ changed the language drastically when C++11 came out. But the language paid such a high price for backwards compatibility. Ownership, to me, feels unnaturally tacked onto C++. The previously simple value taxonomy was butchered by it[2]. In many ways it was a great achievement to massively modernise such a widely used language, but Rust shows us what a language can look like when ownership is a core design concept from the beginning.

C++'s type system does not model object lifetime at all. You can't check for use-after-frees at compile time. Smart pointers are just a library on top of an outdated system, and as such can be misused and abused in ways that Rust just does not allow.

Let's take a look at some (simplified) C++ code I wrote at work, where this misuse occurs. Then we can look at a Rust equivalent, which (rightly) doesn't compile.

Abusing smart pointers in C++

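```cpp
#include <algorithm>   // std::transform
#include <functional>
#include <iterator>    // std::back_inserter
#include <memory>
#include <string>
#include <utility>     // std::move
#include <vector>

// Hypothetical stand-ins so the snippet compiles; the real types are elided.
struct Data {};

class DataValueCheck {
public:
    DataValueCheck(std::string checkStr, std::unique_ptr<Data> data)
        : checkStr_(std::move(checkStr)), data_(std::move(data)) {}

private:
    std::string checkStr_;
    std::unique_ptr<Data> data_;
};

std::vector<DataValueCheck> createChecksFromStrings(
        std::unique_ptr<Data> data,
        std::vector<std::string> dataCheckStrs) {

    auto createCheck = [&](std::string checkStr) {
        // BUG: `data` is moved on every call, but it can only be moved once.
        return DataValueCheck(checkStr, std::move(data));
    };

    std::vector<DataValueCheck> checks;
    std::transform(
        dataCheckStrs.begin(),
        dataCheckStrs.end(),
        std::back_inserter(checks),
        createCheck);

    return checks;
}
```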

The idea of this code is that we take some strings defining some checks to be performed on some data, e.g. is a value within a particular range. We then create a vector of check objects by parsing these strings.

First, we create a lambda that captures by reference, hence the ampersand. The unique pointer to the data is moved in this lambda, which was a mistake.

We then fill our vector with checks constructed from moved data. The problem is that only the first move will be successful: unique pointers are move-only, and once the contents of a std::unique_ptr have been moved into another std::unique_ptr, the standard guarantees that the source is left null. So after the first iteration of std::transform, data is almost certainly a null pointer.

Dereferencing that null pointer later is undefined behaviour! In my case, I got a segmentation fault, which is what usually happens on a null pointer dereference on hosted systems, because the zero memory page is usually reserved. But this behaviour certainly isn't guaranteed. A bug like this could in theory lie dormant for a while, and then your application would crash out of nowhere.

The use of the lambda here is a large part of what makes this dangerous. To std::transform, createCheck is just some callable object, and C++'s type system has no way to express that calling it a second time is an error, so nothing stops std::transform from calling it once per element.

For context on how this bug came about: originally, we were using a shared_ptr to store the data, which would have made this code fine. We wrongly thought we could store it in a unique_ptr instead, and this bug was introduced when we made the change. It went unnoticed in part because the compiler didn't complain.

I'm glad this happened, because it means I can show you a real example of a C++ memory safety bug that went unnoticed by both me and my code reviewer until it later showed up in a test[3]. It doesn't matter if you're an experienced programmer: these bugs happen! And the compiler can't save you. We must demand better tools, for our sanity and for public safety. This is an ethical concern.

With that in mind, let's look at a Rust version.

In Rust, that bad move is not allowed

```rust
pub fn create_checks_from_strings(
    data: Box<Data>,
    data_check_strs: Vec<String>)
    -> Vec<DataValueCheck>
{
    let create_check = |check_str: &String| DataValueCheck::new(check_str, data);
    data_check_strs.iter().map(create_check).collect()
}
```

This is our first look at some Rust code. Now is a good time for me to mention that variables are immutable by default. For something to be modifiable, we need to use the mut keyword, which is kind of like the opposite of const in C and C++.

A Box is simply an owning pointer to a value allocated on the heap. I chose it here because unique_ptrs also point to heap allocations. We don't need anything else to make it analogous to unique_ptr, because of Rust's rule that each object has only one owner at a time.

We're then creating a closure, and using the higher-order function map to apply it to the strings. It's very similar to the C++ version, but less verbose.

But! This doesn't compile, and here's the error message.

```
error[E0525]: expected a closure that implements the `FnMut` trait, but this closure only implements `FnOnce`
 --> bad_move.rs:1:8
  |
6 |     let create_check = |check_str: &String| DataValueCheck::new(check_str, data);
  |                        ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^----^
  |                        |                                                   |
  |                        |                                                   closure is `FnOnce` because it moves
  |                        |                                                   the variable `data` out of its environment
  |                        this closure implements `FnOnce`, not `FnMut`
7 |     data_check_strs.iter().map(create_check).collect()
  |                            --- the requirement to implement `FnMut` derives from here

error: aborting due to previous error

For more information about this error, try `rustc --explain E0525`.
```

One really great thing about the Rust community is that there's a strong focus on making sure there are lots of resources for people to learn from, and on having readable error messages. You can even ask the compiler for more information about an error (here, with rustc --explain E0525), and it will show you a minimal example with explanations.

When we create our closure, the data variable is moved into it, because the closure body uses data by value and each object can only have one owner. Calling the closure moves data out again, into the new DataValueCheck, so the compiler infers that the closure can only be called once: after the first call, it no longer owns the variable. But map requires a callable that it can call repeatedly, once per element, and that may mutate its captured state (an FnMut), so the compilation fails.

I think this snippet shows how powerful the type system is in Rust compared to C++, and how different it is to program in a language where the compiler tracks object lifetime.
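One way to make the Rust version compile, sketched below, is to do what the C++ code did before the bug was introduced: share the data. Rc is Rust's reference-counted pointer, roughly analogous to std::shared_ptr (but with a non-atomic count; Arc is the thread-safe variant). The Data and DataValueCheck definitions are hypothetical stand-ins, since the post doesn't show them.

```rust
use std::rc::Rc;

// Hypothetical stand-ins: the real types aren't shown in the post.
pub struct Data {
    pub value: f64,
}

pub struct DataValueCheck {
    pub check_str: String,
    pub data: Rc<Data>,
}

impl DataValueCheck {
    pub fn new(check_str: &str, data: Rc<Data>) -> Self {
        DataValueCheck { check_str: check_str.to_string(), data }
    }
}

pub fn create_checks_from_strings(
    data: Box<Data>,
    data_check_strs: Vec<String>,
) -> Vec<DataValueCheck> {
    // Convert the uniquely owned Box into a reference-counted Rc.
    let data: Rc<Data> = Rc::from(data);
    // The closure only borrows `data` and gives each check its own Rc handle
    // (a reference-count increment, not a copy of the Data), so it can be
    // called once per string, as `map` requires.
    let create_check = |check_str: &String| DataValueCheck::new(check_str, Rc::clone(&data));
    data_check_strs.iter().map(create_check).collect()
}
```

The change of ownership model is now visible in the types: every check's data field is an Rc, so it's clear at a glance that the data is shared.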

You'll notice that the error message here mentions traits: "expected a closure that implements the `FnMut` trait", for example. Traits are a language feature that tells the compiler what functionality a type must provide, and they are Rust's mechanism for polymorphism.

Polymorphism

In C++ there are a lot of different ways of doing polymorphism, which I think contributes to how bloated the language can feel. There are templates and function and operator overloading for static polymorphism, and subtyping for dynamic polymorphism. These can have major downsides: subtyping can lead to very high coupling, and templates can be unpleasant to use because you can't state what their type parameters are required to support.

In Rust, traits provide a unified way of specifying both static and dynamic interfaces. They are Rust's sole notion of interface. A type can implement a trait, but one type can never extend another. This encourages designs based on composition rather than implementation inheritance, leading to less coupling.

Let's have a look at an example.

```rust
trait Rateable {
    /// Rate fluff out of 10
    /// Ratings above 10 for exceptionally soft bois
    fn fluff_rating(&self) -> f32;
}

struct Alpaca {
    days_since_shearing: f32,
    age: f32
}

impl Rateable for Alpaca {
    fn fluff_rating(&self) -> f32 {
        10.0 * 365.0 / self.days_since_shearing
    }
}
```

There's nothing complicated going on here; I decided a simple but fun example was best. First, we're defining a trait called Rateable. For a type to be Rateable, it has to implement a function called fluff_rating that returns a float (an f32).

Then we define a type called Alpaca and implement this interface for it. We could do the same for another type, say Cat!

```rust
enum Coat {
    Hairless,
    Short,
    Medium,
    Long
}

struct Cat {
    coat: Coat,
    age: f32
}

impl Rateable for Cat {
    fn fluff_rating(&self) -> f32 {
        match self.coat {
            Coat::Hairless => 0.0,
            Coat::Short => 5.0,
            Coat::Medium => 7.5,
            Coat::Long => 10.0
        }
    }
}
```

Here you can see me using pattern matching, another Rust feature. It's similar in usage to a switch statement in C, but semantically very different. Cases in switch blocks are just goto labels; a match must be exhaustive: you have to cover every case for it to compile. Plus you can match on ranges and other constructs, which makes it a lot more flexible.
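A small illustration of both points, using a hypothetical age_group helper: the arms match on integer ranges, and because together they cover every u32, the match compiles without a catch-all arm.

```rust
/// Hypothetical helper: classify a cat by its age in whole years.
fn age_group(age_in_years: u32) -> &'static str {
    // The three ranges together cover every u32, so no `_` arm is needed.
    // Deleting any one of them is a compile error
    // (error[E0004]: non-exhaustive patterns).
    match age_in_years {
        0..=1 => "kitten",
        2..=10 => "adult",
        11..=u32::MAX => "senior",
    }
}
```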

So, now that we've implemented this trait for these two types, we can have a generic function[4].

```rust
// As written, this does not compile: the return type needs to be
// &'static str (see footnote 4).
fn pet<T: Rateable>(boi: T) -> &str {
    match boi.fluff_rating() {
        0.0..=3.5 => "naked alien boi...but precious nonetheless",
        3.5..=6.5 => "increased floof...increased joy",
        6.5..=8.5 => "approaching maximum fluff",
        _ => "sublime. the softest boi!"
    }
}
```

Like in C++, the stuff inside the angle brackets is our type parameters. But unlike with C++ templates, we can constrain them: we're able to say "this function is only for types that are Rateable". That's not something you can do in C++![5] This has consequences beyond readability. Trait bounds on type parameters mean the Rust compiler can type-check the function once, rather than having to check each concrete instantiation separately. This means less work for the type checker and clearer compiler error messages.
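For reference, here's a trimmed, self-contained version that does compile. Compared with the snippets above, it uses the &'static str return type from footnote 4, drops the age fields that the ratings don't use, and swaps the float range patterns for match guards; as a result, a rating of exactly 3.5 (say) falls into the next band up rather than the one below.

```rust
trait Rateable {
    /// Rate fluff out of 10 (above 10 for exceptionally soft bois).
    fn fluff_rating(&self) -> f32;
}

struct Alpaca {
    days_since_shearing: f32,
}

impl Rateable for Alpaca {
    fn fluff_rating(&self) -> f32 {
        10.0 * 365.0 / self.days_since_shearing
    }
}

enum Coat {
    Hairless,
    Short,
    Medium,
    Long,
}

struct Cat {
    coat: Coat,
}

impl Rateable for Cat {
    fn fluff_rating(&self) -> f32 {
        match self.coat {
            Coat::Hairless => 0.0,
            Coat::Short => 5.0,
            Coat::Medium => 7.5,
            Coat::Long => 10.0,
        }
    }
}

/// Works for any `T: Rateable`. String literals live for the whole program,
/// so the return type is `&'static str`.
fn pet<T: Rateable>(boi: T) -> &'static str {
    match boi.fluff_rating() {
        r if r < 3.5 => "naked alien boi...but precious nonetheless",
        r if r < 6.5 => "increased floof...increased joy",
        r if r < 8.5 => "approaching maximum fluff",
        _ => "sublime. the softest boi!",
    }
}
```

Calling pet(Cat { coat: Coat::Long }) and pet(Alpaca { days_since_shearing: 365.0 }) both go through the same generic function; passing a type that doesn't implement Rateable is rejected at the call site.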

You can also use traits dynamically, which isn't preferred as it has a runtime penalty, but is sometimes necessary. I decided it was best not to cover that in this post.

One other big part of traits is the interoperability that comes from standard traits, like Add and Display. Implementing Add means you can add values of your type together with the + operator; implementing Display means you can print them.
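A minimal sketch using a hypothetical FluffRating newtype: implementing std::ops::Add enables +, and implementing std::fmt::Display enables {} formatting, which also gives you to_string() for free.

```rust
use std::fmt;
use std::ops::Add;

/// Hypothetical newtype around a fluff rating.
#[derive(Debug, Clone, Copy, PartialEq)]
struct FluffRating(f32);

// `Add` makes `a + b` work for two FluffRatings.
impl Add for FluffRating {
    type Output = FluffRating;

    fn add(self, other: FluffRating) -> FluffRating {
        FluffRating(self.0 + other.0)
    }
}

// `Display` makes `{}` formatting (and so `println!` and `to_string()`) work.
impl fmt::Display for FluffRating {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "{:.1}/10", self.0)
    }
}
```

With these in place, println!("{}", FluffRating(7.5) + FluffRating(2.0)) prints 9.5/10.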

Rust tools

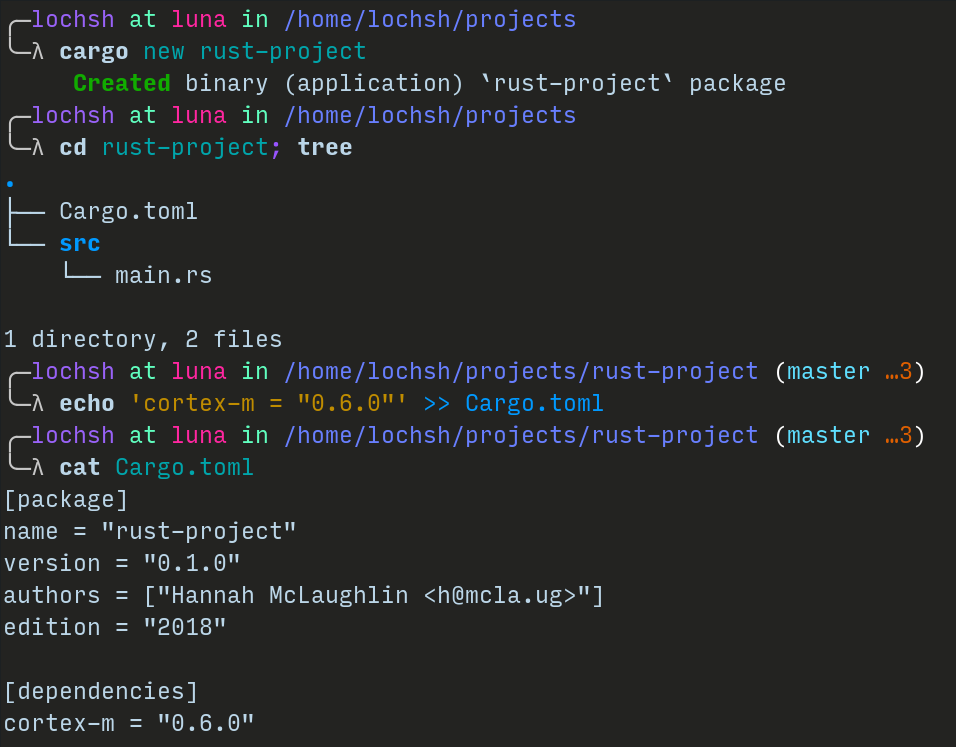

C and C++ don't have a standard way to manage dependencies. There are a few different tools for doing this, and I haven't heard great things about any of them. Using plain Makefiles for your build system is very flexible, but they can be rubbish to maintain. CMake reduces the maintenance burden, but it's less flexible, which can be frustrating.

Rust really shines in this respect. Cargo is the standard tool used across the Rust community for dependency management, packaging, and building and running your code. It's similar in many ways to Pipenv and Poetry in Python. There's an official package repository, crates.io, to go along with it. I don't have a lot more to say about this! It's really nice to use, and it makes me sad that C and C++ don't have the same thing.

Should we all use Rust?

There's no universal answer for this. It depends on your application, as with any programming language. Rust is already being used very successfully in many different places. Microsoft use it for Azure IoT stuff, Mozilla sponsor Rust and use it for parts of the Firefox web browser, and many smaller companies are using it too.

So depending on your application, it's very much production ready.

But for some applications you might find support immature or lacking.

Embedded

In the embedded world, how ready Rust is depends on what you are doing. There are mature resources for Cortex-M that are used in production, and there's a developing but not yet mature RISC-V toolchain.

For x86 and ARMv8 bare metal, such as on recent Raspberry Pis, the story is also good. For more vintage architectures like PIC and AVR there isn't great support, but I don't think that should be a big issue for most new projects.

Cross-compilation support is good for LLVM targets, because the Rust compiler uses LLVM as its backend.

One area where embedded Rust is lacking is that there are no production-grade RTOSs, and HALs are less developed. This isn't an insurmountable issue for many projects, but it would certainly hamper many others. I expect this to keep improving over the next couple of years.

Async

One thing that definitely isn't ready is async support in the language itself, which is still in development: they're still deciding what the async/await syntax should look like.

Interoperability

As for interoperability with other languages, there's a good C FFI in Rust, but you have to go through it if you want to call Rust from C++ or vice versa. That's very common among languages, and I don't expect it to change. I mention it because it makes it a bit of a pain to incorporate Rust into an existing C++ project: you'd need a C layer between the Rust and the C++, which could add a lot of complexity.
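A minimal sketch of the Rust side of that C layer, with a hypothetical function: extern "C" selects the C calling convention and #[no_mangle] keeps the symbol name predictable, so C, or C++ via an extern "C" declaration, can link against it. (In the 2024 edition the attribute is spelled #[unsafe(no_mangle)].)

```rust
/// Hypothetical function exported through the C ABI.
///
/// Matching declaration on the C or C++ side (also hypothetical):
///     extern "C" float fluff_rating_from_days(float days_since_shearing);
#[no_mangle]
pub extern "C" fn fluff_rating_from_days(days_since_shearing: f32) -> f32 {
    // Only C-compatible types (here f32, i.e. C's float) cross the boundary.
    10.0 * 365.0 / days_since_shearing
}
```

Everything richer than plain numbers and pointers, like Rust enums, Strings or C++ classes, has to be flattened into C-compatible types at this boundary, which is where the extra complexity comes from.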

Final thoughts

Were I starting from scratch on a new project at work I would definitely vouch for Rust. I'm really hopeful that it represents a better future for software – one that's more reliable, more secure and more enjoyable to write.

1. From Ian Barland's "Why C and C++ are Awful Programming Languages".
2. The value categories used to be just lvalues (which have an identifiable place in memory) and rvalues (which do not, like literals). It is now much more complicated.
3. Yes... I should have had a test already. But for various unjustified reasons I didn't write a test that covered this particular code until later.
4. Note: cheekily, I included this code that would not actually compile. You'd need the return type to be &'static str. This is "lifetime annotation" and was outside the scope of my talk and this blog post. Read about it here.
5. I hear a lot of people say that Concepts in C++20 are analogous to traits, but this isn't true. I explain why here.